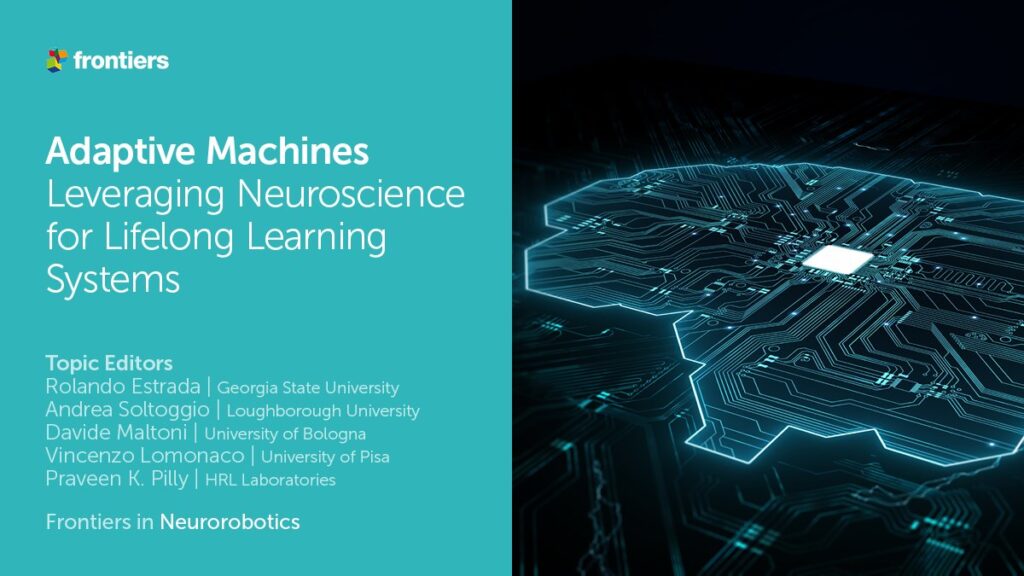

I’m happy to advertise a new Research Topic in Frontiers available at:

Adaptive Machines: Leveraging Neuroscience for Lifelong Learning Systems

Deadline for paper submission: 30 September 2021.

Abstract:

Artificial intelligence has always sought inspiration in the brain. Artificial neural networks (ANNs), in particular, were modeled after their biological counterparts (biological neural networks, BNNs). However, state-of-the-art deep networks are drastically different from BNNs. Their architectures (at both the single-neuron and macro levels), learning algorithms, and failure conditions have little in common with brain circuit dynamics.

In particular, state-of-the-art deep learning is optimized for single, well-defined, static tasks. Deep networks struggle to learn multiple or evolving tasks over time (i.e., continual learning) or to adjust their processing based on environmental conditions. They are also less modular than BNNs, and thus less energy efficient, and use few, if any, feedback signals.

We believe that deep networks suffer from these limitations because their modeling of brain dynamics is too superficial. Modeling more sophisticated neural mechanisms is therefore key for deep networks to achieve continual or lifelong learning and to cope with open-ended, dynamic environments.

The goal of this Research Topic is to publish novel models and/or algorithms that expand the capabilities of deep learning (e.g., achieve better continual learning) by incorporating additional properties of BNNs. We are also interested in neuroscience research that elucidates mechanisms that can be leveraged by the AI community.

Neuroscience has uncovered a wide range of learning and regulatory mechanisms, at scales ranging from single molecules to the entire nervous system, that help the brain learn, remember, and adapt. These include replay (systems-level consolidation), neuromodulation, neurogenesis, neuroevolution, attention, homeostatic plasticity, and synaptic consolidation. Some deep learning techniques are motivated by these mechanisms (e.g., experience replay or attention networks), but they have rarely been used for lifelong learning. As such, our focus will be on approaches that leverage brain-like principles, ideally biologically well-grounded ones, to solve problems currently beyond the capabilities of the state of the art. We will also welcome neuroscience research that helps to elucidate the brain’s mechanisms, in the hope that they can be used by the next generation of machine learning models.

The scope of this Research Topic covers:

(1) biologically inspired techniques in deep learning

(2) neuroscience research on how the brain facilitates learning and adapts to different contexts

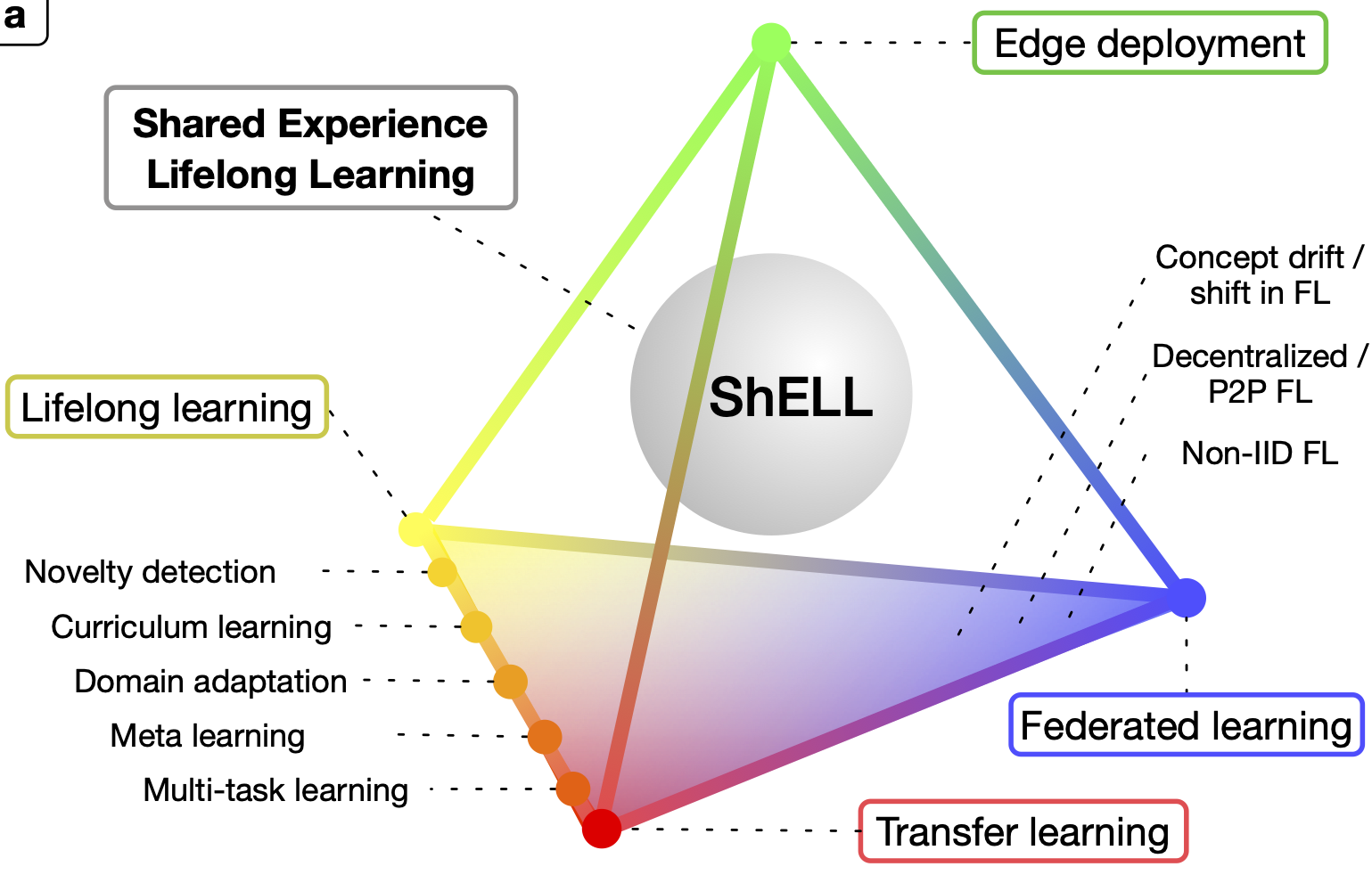

We seek to address continual or lifelong learning, transfer learning, and other ML scenarios that go beyond the traditional train-then-test learning paradigm. Since machine learning is an experimental field, a paper must include experimental results to be accepted; we also encourage authors to include theoretical analyses of their methods.

We aim to collect the following types of manuscripts: Original Research and Brief Research Reports.

Since the goal of this Research Topic is to publish highly novel, experimentally grounded work, we are not interested in Reviews (Systematic, Policy and Practice, etc.), General Commentaries, or Opinions. In addition, since the editorial board does not include medical experts, we cannot accept Clinical Trials or Study Protocols.

Dr. P. K. Pilly is a Senior Research Scientist at HRL Laboratories, LLC, and has pending patents on continual learning.

The other Topic Editors declare no competing interests with regard to the Research Topic.

Keywords: Deep Learning, Continual Learning, Lifelong Learning, Neuroscience, Fast Adaptation

Important Note: All contributions to this Research Topic must be within the scope of the section and journal to which they are submitted, as defined in their mission statements. Frontiers reserves the right to guide an out-of-scope manuscript to a more suitable section or journal at any stage of peer review.